There was a time when video editing felt like digital manual labor. You would sit in a dark room for ten hours, squinting at a timeline, just to cut out “ums” and “uhs.”

Then you would spend another five hours trying to mask out a stray power line in the background. It was a process defined by technical endurance rather than creative vision.

Things have shifted recently. We are moving away from the era of “pixel pushing” and entering the era of creative direction.

Learning how to use AI for video editing is no longer a futuristic hobby for tech enthusiasts. It has become one of the main ways professional creators protect both their sanity and their margins.

The Shift from Technician to Director

In the past, the editor was the person who knew which buttons to press. Today, the editor is the person who knows which questions to ask the machine.

Virtue Market Research projects that the global AI video editing market will reach $9.3 billion by 2030 (Virtue Market Research, 2025).

This growth is fueled by a simple reality: creators are being asked to produce more content than ever before. If you are still doing everything by hand, you are essentially using an abacus to compete with people who have calculators.

The true power of these tools lies in their ability to handle the “drudgery.” This frees you up to focus on the pacing, the emotional arc, and the story.

As the team at Bottle Rocket Media puts it, “AI doesn’t just save time, it saves money, too” (Bottle Rocket Media, 2024). By automating the repetitive parts, you turn saved hours into better storytelling.

Think of it as having a highly skilled assistant who never gets tired of doing the boring stuff. You provide the vision; the assistant provides the labor.

Phase 1: The Automated First Pass

The “Rough Cut” is often the most exhausting part of the process. It involves sifting through hours of raw footage to find the few gems worth keeping.

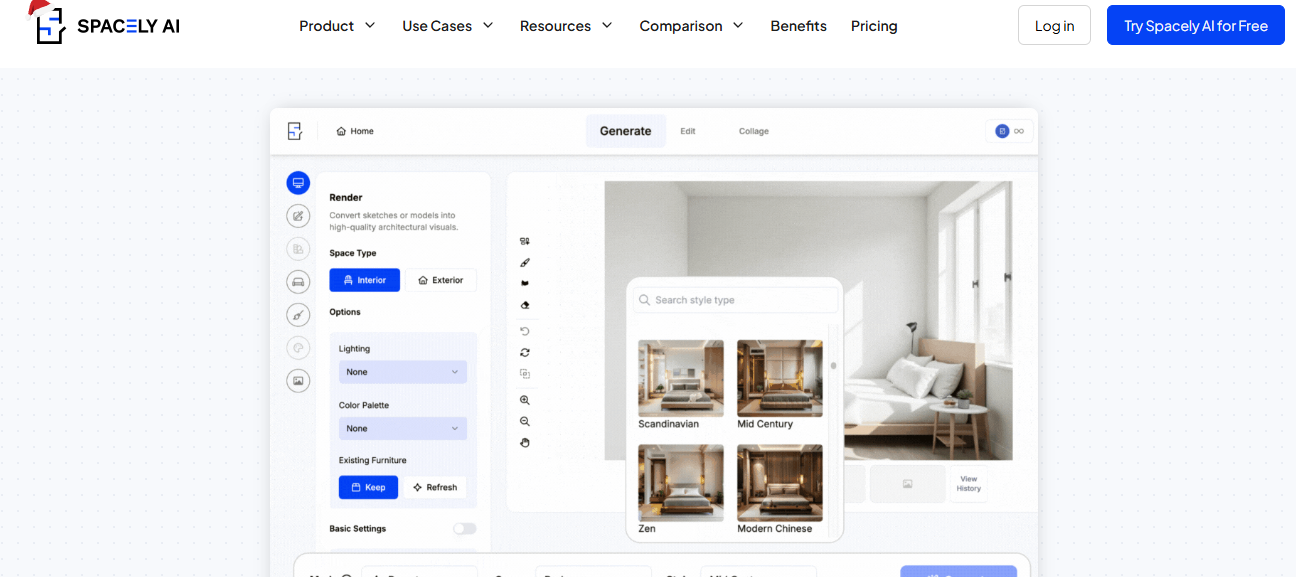

This is where text-based editing has completely changed the game. Tools like Descript and the latest updates in Adobe Premiere Pro allow you to edit video by editing a transcript.

Instead of looking at blue waveforms on a timeline, you look at a document. If you delete a sentence in the text, the corresponding video clip is deleted automatically.
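To make the idea concrete, here is a minimal Python sketch. It assumes a word-level transcript in which every word carries start and end timestamps in seconds. The `Word` class and `kept_segments` function are hypothetical and do not represent Descript's or Premiere Pro's actual data model. Deleting words simply removes their time spans, and whatever survives becomes the list of clips kept on the timeline.

```python
from dataclasses import dataclass


@dataclass
class Word:
    # Hypothetical word-level transcript entry; times are in seconds.
    text: str
    start: float
    end: float


def kept_segments(words, deleted):
    """Return the (start, end) source ranges that survive after deleting
    the words whose indices are in `deleted`.

    Consecutive kept words are merged into one segment, so each segment
    becomes one uninterrupted clip on the edited timeline.
    """
    segments = []
    for i, word in enumerate(words):
        if i in deleted:
            continue
        if i > 0 and (i - 1) not in deleted:
            # The previous word was kept too: extend the current clip.
            segments[-1] = (segments[-1][0], word.end)
        else:
            # First word, or the word right after a deletion: start a new clip.
            segments.append((word.start, word.end))
    return segments


transcript = [
    Word("So", 0.0, 0.3), Word("um", 0.4, 0.7), Word("welcome", 0.8, 1.3),
    Word("to", 1.35, 1.5), Word("the", 1.55, 1.7), Word("show", 1.75, 2.2),
]
# Deleting "um" (index 1) in the text cuts its span out of the video.
print(kept_segments(transcript, {1}))  # [(0.0, 0.3), (0.8, 2.2)]
```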

Eliminating the Silence

Manual “jump cutting” used to take forever. Now, a “Remove Silence” feature can find the gaps in speech, cut them out, and close up the timeline (a “ripple delete”) in one click.

This is particularly useful for talking-head videos or podcasts where pacing is vital. It ensures the flow of information remains tight without requiring manual trimming.
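Commercial “Remove Silence” features don't document their exact detection methods, so treat the following as a simplified sketch of the idea. It assumes mono audio samples in the range -1.0 to 1.0. The code measures loudness (RMS) in short windows, treats long quiet runs as silence, and returns the ranges to keep. Placing those ranges back to back is the ripple. The `speech_ranges` function and its threshold and minimum-silence values are hypothetical defaults that you would tune by ear.

```python
import math


def speech_ranges(samples, sample_rate, window_s=0.05,
                  threshold=0.02, min_silence_s=0.5):
    """Return (start, end) times in seconds of audio to keep.

    Hypothetical helper: a window is "silent" when its RMS level is below
    `threshold`, and only silent runs at least `min_silence_s` long are cut,
    so short natural pauses between words stay in.
    """
    win = max(1, round(window_s * sample_rate))  # samples per window
    n_windows = math.ceil(len(samples) / win)
    silent = []
    for k in range(n_windows):
        chunk = samples[k * win:(k + 1) * win]
        rms = math.sqrt(sum(s * s for s in chunk) / len(chunk))
        silent.append(rms < threshold)

    # Find runs of silent windows that are long enough to cut.
    min_windows = max(1, round(min_silence_s / window_s))
    cuts, k = [], 0
    while k < n_windows:
        if not silent[k]:
            k += 1
            continue
        j = k
        while j < n_windows and silent[j]:
            j += 1
        if j - k >= min_windows:
            cuts.append((k, j))
        k = j

    # Everything between the cuts is kept; playing it back to back is the ripple.
    keep, prev = [], 0
    for a, b in cuts:
        if a > prev:
            keep.append((prev, a))
        prev = b
    if prev < n_windows:
        keep.append((prev, n_windows))

    duration = len(samples) / sample_rate
    return [(a * win / sample_rate, min(b * win / sample_rate, duration))
            for a, b in keep]


rate = 100  # tiny sample rate, just for the demo
audio = [0.5] * 100 + [0.0] * 100 + [0.5] * 50  # 1 s speech, 1 s silence, 0.5 s speech
print(speech_ranges(audio, rate))  # [(0.0, 1.0), (2.0, 2.5)]
```

The minimum-silence setting matters most in practice. If it is set too low, the result sounds breathless because every natural pause is removed.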

Removing Filler Words

We all have verbal tics. “You know,” “like,” and “actually” can clutter a performance and make a speaker sound less confident.

Modern AI models can identify these specific filler words across an entire project. With one command, you can strip them all out, leaving a clean, professional delivery.

This process, which once took a full afternoon, now takes about thirty seconds. It allows you to get to the “creative” part of the edit much faster.
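Here is a minimal sketch of the matching step, assuming the transcript is already split into words. The filler list and the `filler_indices` function are hypothetical. Plain word matching like this would also strip a meaningful “like” (as in “I like this”), which is why real tools rely on models that weigh context rather than a fixed list.

```python
# Hypothetical filler list; real tools weigh context instead of matching a fixed list.
FILLERS = {("um",), ("uh",), ("like",), ("actually",), ("you", "know")}


def filler_indices(words, fillers=FILLERS):
    """Return the set of word indices that belong to filler phrases.

    Matching ignores case and surrounding punctuation, and longer phrases
    such as "you know" are tried before single words.
    """
    norm = [w.lower().strip(".,!?;:") for w in words]
    longest = max(len(f) for f in fillers)
    hits = set()
    i = 0
    while i < len(norm):
        for n in range(longest, 0, -1):
            if i + n <= len(norm) and tuple(norm[i:i + n]) in fillers:
                hits.update(range(i, i + n))
                i += n
                break
        else:
            i += 1  # no filler starts here; move on to the next word
    return hits


words = "So, um, you know, I actually think this is great.".split()
hits = filler_indices(words)
print(" ".join(w for i, w in enumerate(words) if i not in hits))
# So, I think this is great.
```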

Phase 2: Visual Refinement and Masking

Once the structure is set, you usually move into the visual cleanup phase. This used to involve the dreaded task of rotoscoping.

Rotoscoping is the technical term for drawing a mask around an object, frame by frame, to separate it from its background. If a person walks in front of a tree, you would have to outline them by hand 24 times for every second of footage shot at 24 frames per second.

AI tools like DaVinci Resolve’s “Magic Mask” or Runway’s “Green Screen” tool have largely automated this. Often you only need to click on, or draw a quick stroke over, the subject in a single frame.

The AI then tracks that person or object through the entire shot. It is designed to keep the mask accurate through changes in depth, lighting, and motion blur.

Generative Fill and Object Removal

We have all had a “perfect” shot ruined by a piece of trash on the ground or a logo we don’t have the rights to show. In the old days, you’d just have to live with it.

Now, you can use generative fill to remove objects. The AI looks at the surrounding pixels and “hallucinates” what should be behind the object you want to disappear.

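Many of these tools are built on diffusion models, particularly latent diffusion models. In simple terms, the AI starts with random digital noise and refines it step by step into a realistic patch that fits the surrounding image, guided by what it learned during training. “Latent” means this refinement happens in a compressed internal representation of the image rather than on the raw pixels.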

Generative Extend

Have you ever needed a clip to be just two seconds longer to hit a beat in the music, only to find that the camera operator stopped recording too early?

New features, such as “Generative Extend” in Premiere Pro, can actually synthesize new frames at the end of a clip. It mimics the movement and lighting of the original footage.

This creates a seamless extension that didn’t exist in reality. It is a lifesaver for editors who are working with limited raw assets.

Phase 3: Mastering Audio with Precision

Bad audio will ruin a video faster than bad visuals. Viewers will tolerate a grainy 1080p image, but they will turn off a video with distracting background hiss.

Using AI for video editing also means placing a heavy focus on audio restoration.

Tools like Adobe Podcast or Waves Clarity can take a recording made on a cheap phone and make it sound close to a studio recording.

These tools don’t just “turn down the noise.” They actually reconstruct the human voice by identifying the specific frequencies of speech.

Voice Cloning for “Pickups”

Imagine you have finished a whole edit, only to realize you mispronounced the client’s name in one sentence. Normally, you would have to set up the mic and record a “pickup”: a short re-recording of just that line.

Now, you can use a “digital twin” of your voice. You simply type the correct word into the software, and it generates the audio using your specific tone and inflection.

This is perfect for small corrections. It ensures the audio remains consistent throughout the project without requiring a new recording session.

AI Music Remixing

Finding the right track is hard, but making that track fit the length of your video is harder. You usually have to cut the song and hope the transition isn’t jarring.

Modern “Remix” tools can analyze a song’s structure: its beats, its chorus, and its energy levels. You then tell the software exactly how long the song needs to be.

The AI will then rearrange the segments of the song to hit that exact duration. It creates a custom version of the track that ends perfectly on your final frame.
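Commercial remix features don't publish their algorithms, so here is one plausible way to frame the problem as a hedged sketch. It assumes a beat-analysis step (not shown) has already split the song into named sections with lengths in beats, with the intro first and the outro last. The `remix` function and its `max_repeats` parameter are hypothetical. The function keeps the intro and outro and lets each middle section play zero to a few times in its original order. It then picks the total length closest to the target. Because cuts fall only at section boundaries, every edit stays on the beat, and among equally good lengths the function prefers the arrangement that changes the original least.

```python
def remix(sections, tempo_bpm, target_s, max_repeats=3):
    """Rearrange song sections to land as close as possible to target_s.

    Hypothetical sketch, not how any commercial remix feature is documented to work.
    sections: list of (name, length_in_beats); first is the intro, last the outro.
    Returns (ordered section names, resulting duration in seconds).
    """
    beat_s = 60.0 / tempo_bpm
    target_beats = round(target_s / beat_s)
    intro, *middle, outro = sections

    # best[total_beats] = (cost, middle sections used); cost counts how far each
    # section's play count strays from the original "play once".
    best = {intro[1] + outro[1]: (0, [])}
    for name, beats in middle:
        nxt = {}
        for total, (cost, seq) in best.items():
            for reps in range(max_repeats + 1):
                t = total + reps * beats
                c = cost + abs(reps - 1)
                if t not in nxt or c < nxt[t][0]:
                    nxt[t] = (c, seq + [name] * reps)
        best = nxt

    # The closest length wins; the shorter option breaks ties.
    total = min(best, key=lambda t: (abs(t - target_beats), t))
    return [intro[0]] + best[total][1] + [outro[0]], total * beat_s


song = [("intro", 16), ("verse", 32), ("chorus", 32), ("bridge", 16), ("outro", 16)]
# At 120 BPM this song runs 56 s; ask for a 40 s edit.
print(remix(song, tempo_bpm=120, target_s=40))
# (['intro', 'chorus', 'bridge', 'outro'], 40.0)
```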

Phase 4: Localization and Accessibility

If you are only publishing in English, you are ignoring about 80% of the world. Global reach is the new standard for digital content.

AI-driven transcription has reached a level of accuracy that was unthinkable five years ago. High-end tools now boast a 98% accuracy rate (Gling.ai, 2026).
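Transcription accuracy is commonly quoted as 1 minus the word error rate (WER). WER is the number of substituted, deleted, and inserted words needed to turn the machine transcript into a correct reference, divided by the number of reference words. Vendors don't always say exactly how they measure accuracy, so treat this standard edit-distance sketch as a way to check a tool on your own footage rather than as how any particular product computes its figure. The function name `word_error_rate` is our own. By this measure, 98% accuracy works out to roughly one error every 50 words.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # d[i][j] = edits needed to turn the first i reference words
    # into the first j hypothesis words.
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            same = ref[i - 1] == hyp[j - 1]
            d[i][j] = min(d[i - 1][j] + 1,                       # deletion
                          d[i][j - 1] + 1,                       # insertion
                          d[i - 1][j - 1] + (0 if same else 1))  # match or substitution
    return d[len(ref)][len(hyp)] / len(ref)


ref = "the quick brown fox jumps over the lazy dog"
hyp = "the quick brown fox jumped over a lazy dog"
wer = word_error_rate(ref, hyp)
print(f"WER {wer:.1%}, accuracy {1 - wer:.1%}")  # WER 22.2%, accuracy 77.8%
```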

But it goes beyond just captions. We are now seeing “AI Dubbing” that can translate your speech into Spanish, Mandarin, or French.

Lip-Syncing for Dubs

The “uncanny valley” of dubbed movies usually comes from the fact that the lips don’t match the new language. New AI models are solving this by re-animating the speaker’s mouth.

The software adjusts the facial movements of the person on screen to match the phonemes of the new language. This makes the translation feel natural and native.

Automated Social Clipping

Once your long-form video is done, the work is only half-finished. Now you need to create “Shorts,” “Reels,” and “TikToks” to promote it.

Tools like Opus Clip or Munch can analyze your full video and identify the “hooks.” They look for high-energy moments, interesting quotes, or visual shifts.

The AI then automatically crops the video into a vertical 9:16 format. It even adds dynamic, animated captions that highlight keywords to keep viewers engaged.
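The cropping step itself is simple geometry. Here is a minimal sketch, assuming a landscape source and a horizontal subject position (`subject_x`, in pixels) that a face or speaker tracker would supply. The tracker is not shown, and `vertical_crop` is a hypothetical helper. A full-height 9:16 window on 1080-pixel-tall footage is 1080 × 9 / 16 = 607.5 pixels wide. The sketch rounds this to an even 608 because common 4:2:0 video encoders need even frame dimensions.

```python
def vertical_crop(src_w, src_h, subject_x, aspect_w=9, aspect_h=16):
    """Return (x, y, width, height) of a full-height crop window with the
    given aspect ratio, centered on subject_x and kept inside the frame.

    Hypothetical helper: subject_x is assumed to come from a tracker (not shown).
    """
    crop_h = src_h
    crop_w = round(crop_h * aspect_w / aspect_h)
    crop_w -= crop_w % 2  # keep the width even for 4:2:0 encoders
    if crop_w > src_w:
        raise ValueError("source frame is too narrow for this aspect ratio")
    x = round(subject_x - crop_w / 2)
    x = max(0, min(x, src_w - crop_w))  # clamp so the window stays in frame
    return x, 0, crop_w, crop_h


print(vertical_crop(1920, 1080, subject_x=960))   # (656, 0, 608, 1080)
print(vertical_crop(1920, 1080, subject_x=1900))  # (1312, 0, 608, 1080)
```

The clamp explains why a speaker standing at the very edge of a wide shot ends up off-center in the vertical version: the window cannot slide past the frame border.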

Why the Human Touch Still Matters

With all this automation, people often wonder if the “Editor” will become obsolete. The answer is a firm no.

AI is incredibly good at execution, but it is fundamentally terrible at “taste.” It doesn’t know why a specific pause makes a viewer feel sad.

It doesn’t understand irony, sarcasm, or the subtle subversion of a genre. These are deeply human traits that require a soul to communicate.

The goal of using AI for video editing is to remove the “How” so you can focus on the “Why.” You use the tools to handle the math so you can focus on the art.

Expert editor and consultant Vince Opra suggests that the best way to start is to “follow all four phases on a simple project” before moving to high-stakes work (YouTube, 2026).

The best editors of the future won’t be the ones who can mask the best. They will be the ones who can best orchestrate these technological “workers” to tell a story that feels authentic.

Strategic Steps to Start Today

If you are looking to integrate these tools into your workflow, don’t try to change everything at once. Start with the biggest bottleneck.

Step 1: Audit Your Time

Track your next project. Where do you spend the most “unthinking” time? Is it captioning? Is it color correction?

Pick one tool that solves that specific problem. If you hate transcribing, try Descript. If you struggle with color, look into Colorlab AI.

Step 2: Build Templates

AI works best when it has a framework. Once you find a caption style or a color grade you like, save it as a “Preset.”

Consistency is what builds a brand. Use the AI to replicate your high standards across every piece of content you produce.

Step 3: Test and Refine

Never trust the AI 100%. Always review the automated captions for “hallucinations” (where the AI makes up a word it didn’t understand).

The AI is your co-pilot, not the captain. You should always have the final say on the “Export” button.

Summary of Key AI Editing Concepts

| Concept | Simple Explanation |
| --- | --- |
| Text-Based Editing | Editing video by deleting or moving words in a text transcript. |
| Rotoscoping | Cutting an object out of its background to apply effects or change the setting. |
| Generative Extend | Creating brand-new video frames to make a clip longer than it actually is. |
| Voice Cloning | Creating a digital version of your voice to fix mistakes in your audio. |
| Short-Form Extraction | Using AI to find the “viral” moments in a long video and auto-format them for social media. |

Conclusion

The landscape of video production has changed forever. We are no longer limited by how fast we can click a mouse or how many frames we can manually mask.

By embracing these tools, you are reclaiming your time. You are shifting your energy from the “drudgery” of technical execution to the “joy” of creative discovery.

Whether you are a solo YouTuber or a professional filmmaker, the goal remains the same: connecting with an audience through a story.

AI is simply the newest, sharpest tool in your kit to help that story shine. The technology is here to assist you, not replace the spark of genius that only you can provide.


Frequently Asked Questions

Can AI edit an entire video from start to finish without help?

While some tools can generate a “rough cut” based on a script, they still lack a sense of timing and emotional nuance. You still need a human to refine the pacing and ensure the story makes sense.

Is AI video editing expensive for beginners?

Not necessarily. Many powerful tools like CapCut or the basic versions of Descript are free or very affordable. High-end professional suites like Premiere Pro require a subscription, but they often pay for themselves in time saved.

Will using AI make my videos look generic?

Only if you rely solely on templates. If you use AI to handle the technical tasks and use your own “creative eye” for the final polish, your work will remain unique and authentic.

Does AI video editing require a powerful computer?

Many modern AI tools are “cloud-based,” meaning the heavy processing happens on a remote server. This allows you to edit high-quality video even on a standard laptop or a tablet.

What is the best way to learn these new tools?

The best way is to “learn by doing.” Take a small project and try to complete it using at least one AI-powered feature. There are also thousands of free tutorials available on platforms like YouTube.

Can AI help with color grading?

Yes, tools like Colorlab AI or DaVinci Resolve’s AI features can match colors across different cameras automatically. This ensures your video looks consistent even if you shot it on different days or with different gear.
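This article does not describe how Colorlab AI or DaVinci Resolve match shots internally. A classic and much simpler technique conveys the idea, though: shift and scale each color channel of one shot so that its average and spread (mean and standard deviation) match a reference shot. The sketch below works on raw RGB values and uses a hypothetical function name. Published versions of this technique usually work in a perceptual color space rather than raw RGB, so treat this as an illustration only.

```python
from statistics import mean, pstdev


def match_colors(source, reference):
    """Return source pixels adjusted so each RGB channel's mean and standard
    deviation match the reference shot. Pixels are (r, g, b) in 0-255.

    Hypothetical helper illustrating mean/std color transfer in plain RGB.
    """
    channels = []
    for c in range(3):
        src = [p[c] for p in source]
        ref = [p[c] for p in reference]
        s_mean, s_std = mean(src), pstdev(src)
        r_mean, r_std = mean(ref), pstdev(ref)
        scale = r_std / s_std if s_std > 0 else 1.0  # flat channel: only shift it
        channels.append([min(255.0, max(0.0, (v - s_mean) * scale + r_mean))
                         for v in src])
    return list(zip(*channels))


camera_b = [(100, 50, 50), (120, 70, 60)]   # shot to correct
camera_a = [(110, 60, 40), (150, 100, 60)]  # reference look
print(match_colors(camera_b, camera_a))
# [(110.0, 60.0, 40.0), (150.0, 100.0, 60.0)]
```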

Is it ethical to use AI to change someone’s voice or face?

Ethical use requires consent. You should always have the permission of the person whose likeness you are altering. In professional settings, this is usually covered by a “digital likeness” release form.

How does AI improve video SEO?

By automatically generating accurate transcripts and captions, AI makes your video content “searchable” by text-based search engines. This helps your videos show up in results for specific questions or keywords.

About the Author

Nany Powell is an AI expert with more than 12 years of experience in AI projects.